Microsoft DP-700 Practice Exams

Last updated on 27.12.2025

- Exam code: DP-700

- Exam name: Microsoft Fabric Data Engineer

- Certification provider: Microsoft

You have a Fabric workspace named Workspace1 that contains an Apache Spark job definition named Job1.

You have an Azure SQL database named Source1 that has public internet access disabled.

You need to ensure that Job1 can access the data in Source1.

What should you create?

- A . an on-premises data gateway

- B . a managed private endpoint

- C . an integration runtime

- D . a data management gateway

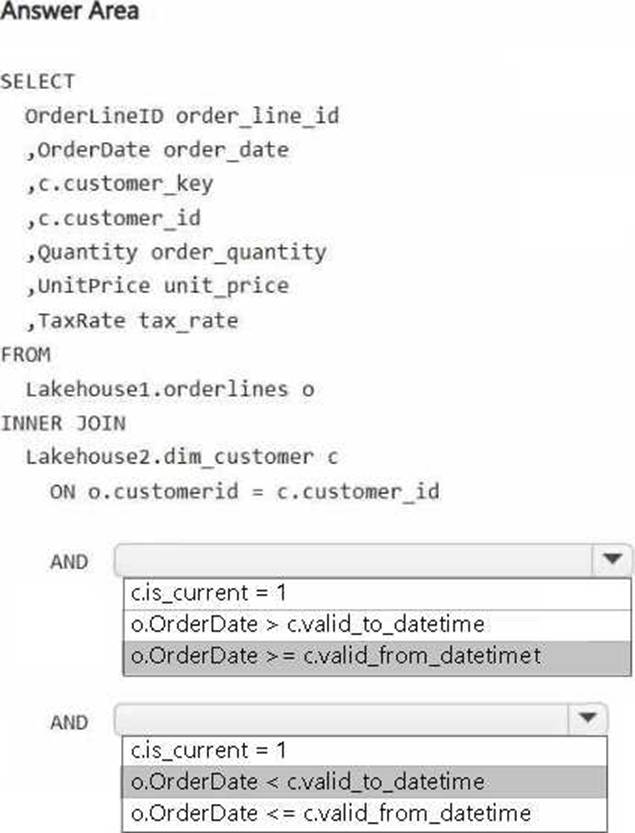

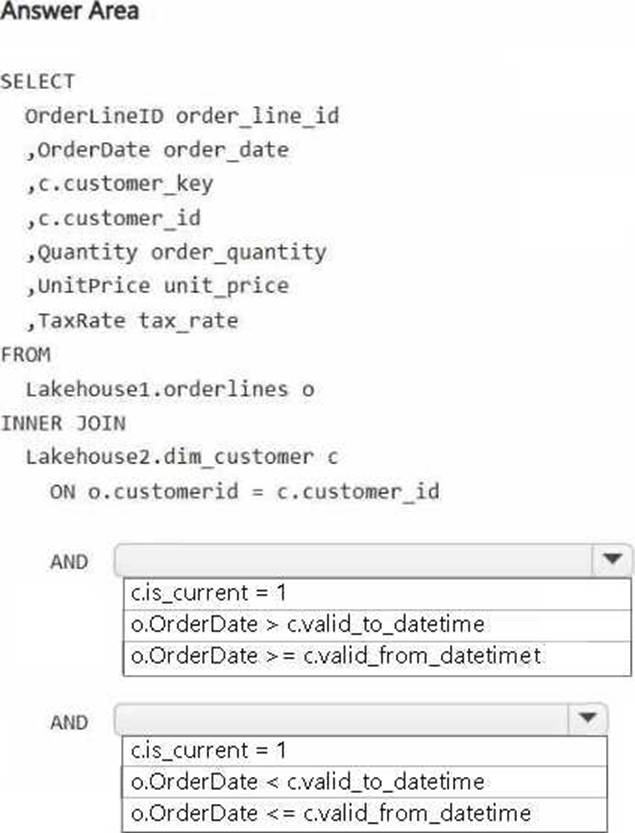

HOTSPOT

You have a Fabric workspace that contains two lakehouses named Lakehouse1 and Lakehouse2. Lakehouse1 contains staging data in a Delta table named Orderlines. Lakehouse2 contains a Type 2 slowly changing dimension (SCD) table named Dim_Customer.

You need to build a query that will combine data from Orderlines and Dim_Customer to create a new fact table named Fact_Orders. The new table must meet the following requirements:

– Enable the analysis of customer orders based on historical attributes.

– Enable the analysis of customer orders based on the current attributes.

How should you complete the statement? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.
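As background for the two requirements, a common pattern with a Type 2 SCD is to store in each fact row both the surrogate key of the dimension version that was valid on the order date (historical attributes) and the customer's business key (current attributes). The following is a minimal plain-Python sketch of that idea, not the statement from the answer area. All column names (`CustomerKey`, `CustomerID`, `ValidFrom`, `ValidTo`, `IsCurrent`, `OrderDate`, `City`) and all sample rows are hypothetical and are not taken from the question.

```python
from datetime import date

# Hypothetical Type 2 SCD rows: one row per version of a customer.
dim_customer = [
    {"CustomerKey": 1, "CustomerID": "C1", "City": "Leeds",
     "ValidFrom": date(2023, 1, 1), "ValidTo": date(2024, 6, 30), "IsCurrent": False},
    {"CustomerKey": 2, "CustomerID": "C1", "City": "York",
     "ValidFrom": date(2024, 7, 1), "ValidTo": date(9999, 12, 31), "IsCurrent": True},
]

# Hypothetical staging rows.
orderlines = [
    {"OrderID": 100, "CustomerID": "C1", "OrderDate": date(2024, 3, 15)},
    {"OrderID": 101, "CustomerID": "C1", "OrderDate": date(2024, 9, 2)},
]


def build_fact_orders(orders, dim):
    """Attach the surrogate key of the dimension version valid on the order date."""
    fact = []
    for order in orders:
        for version in dim:
            same_customer = version["CustomerID"] == order["CustomerID"]
            valid = version["ValidFrom"] <= order["OrderDate"] <= version["ValidTo"]
            if same_customer and valid:
                fact.append({
                    "OrderID": order["OrderID"],
                    "CustomerKey": version["CustomerKey"],  # historical view
                    "CustomerID": order["CustomerID"],      # current view
                })
    return fact


def historical_city(fact_row, dim):
    """Look up the attribute as it was when the order was placed."""
    return next(v["City"] for v in dim if v["CustomerKey"] == fact_row["CustomerKey"])


def current_city(fact_row, dim):
    """Look up the attribute from the customer's current dimension row."""
    return next(v["City"] for v in dim
                if v["CustomerID"] == fact_row["CustomerID"] and v["IsCurrent"])


fact_orders = build_fact_orders(orderlines, dim_customer)
```

In this sketch the surrogate key pins each order to one dimension version, while the business key always resolves to the row flagged as current.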

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

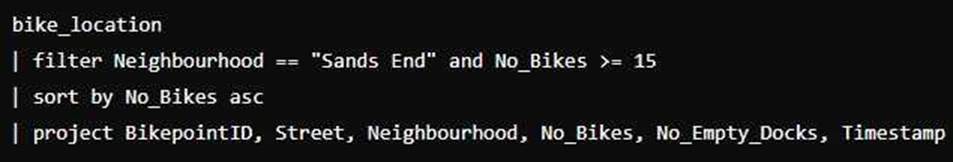

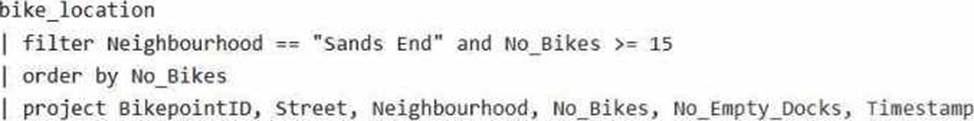

You have a Fabric eventstream that loads data into a table named Bike_Location in a KQL database.

The table contains the following columns:

– BikepointID

– Street

– Neighbourhood

– No_Bikes

– No_Empty_Docks

– Timestamp

You need to apply transformation and filter logic to prepare the data for consumption. The solution must return data for a neighbourhood named Sands End when No_Bikes is at least 15. The results must be ordered by No_Bikes in ascending order.

Solution: You use the following code segment:

Does this meet the goal?

- A . Yes

- B . No
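To make the stated goal concrete, the sketch below expresses the required filter and ordering in plain Python over a few hypothetical rows; it is not the code segment from the question. "At least 15" is read as `No_Bikes >= 15`, and the result is sorted in ascending order. When checking a KQL solution, keep in mind that the `sort by` operator orders descending unless `asc` is given explicitly.

```python
# Hypothetical sample rows; only the columns needed for the logic are included.
rows = [
    {"BikepointID": "BP1", "Neighbourhood": "Sands End", "No_Bikes": 20},
    {"BikepointID": "BP2", "Neighbourhood": "Sands End", "No_Bikes": 15},
    {"BikepointID": "BP3", "Neighbourhood": "Sands End", "No_Bikes": 9},
    {"BikepointID": "BP4", "Neighbourhood": "Chelsea", "No_Bikes": 30},
]


def prepare(bike_rows):
    """Keep Sands End rows with at least 15 bikes, ordered by No_Bikes ascending."""
    kept = [
        r for r in bike_rows
        if r["Neighbourhood"] == "Sands End" and r["No_Bikes"] >= 15
    ]
    return sorted(kept, key=lambda r: r["No_Bikes"])


result = prepare(rows)
```

A solution meets the goal only if it applies both conditions and produces the same ordering as `prepare`.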

You have a Fabric workspace named Workspace1.

You plan to integrate Workspace1 with Azure DevOps.

You will use a Fabric deployment pipeline named deployPipeline1 to deploy items from Workspace1 to higher environment workspaces as part of a medallion architecture. You will run deployPipeline1 by using an API call from an Azure DevOps pipeline.

You need to configure API authentication between Azure DevOps and Fabric.

Which type of authentication should you use?

- A . service principal

- B . Microsoft Entra username and password

- C . managed private endpoint

- D . workspace identity

You have a Fabric workspace that contains a warehouse named Warehouse1.

You have an on-premises Microsoft SQL Server database named Database1 that is accessed by using an on-premises data gateway.

You need to copy data from Database1 to Warehouse1.

Which item should you use?

- A . an Apache Spark job definition

- B . a data pipeline

- C . a Dataflow Gen1 dataflow

- D . an eventstream

You need to schedule the population of the medallion layers to meet the technical requirements.

What should you do?

- A . Schedule a data pipeline that calls other data pipelines.

- B . Schedule a notebook.

- C . Schedule an Apache Spark job.

- D . Schedule multiple data pipelines.

You have a Fabric workspace.

You have semi-structured data.

You need to read the data by using T-SQL, KQL, and Apache Spark. The data will only be written by using Spark.

What should you use to store the data?

- A . a lakehouse

- B . an eventhouse

- C . a datamart

- D . a warehouse

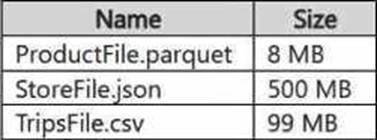

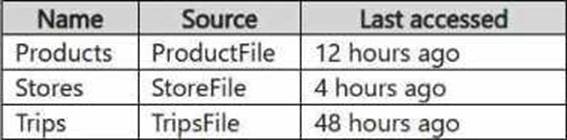

You have a Google Cloud Storage (GCS) container named storage1 that contains the files shown in the following table.

You have a Fabric workspace named Workspace1 that has the cache for shortcuts enabled. Workspace1 contains a lakehouse named Lakehouse1.

Lakehouse1 has the shortcuts shown in the following table.

You need to read data from all the shortcuts.

Which shortcuts will retrieve data from the cache?

- A . Stores only

- B . Products only

- C . Stores and Products only

- D . Products, Stores, and Trips

- E . Trips only

- F . Products and Trips only

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

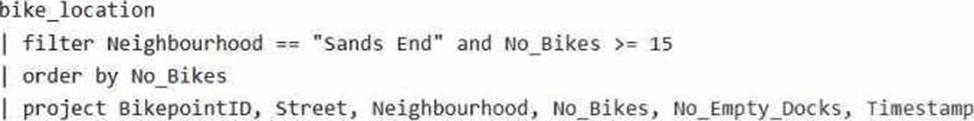

You have a Fabric eventstream that loads data into a table named Bike_Location in a KQL database.

The table contains the following columns:

– BikepointID

– Street

– Neighbourhood

– No_Bikes

– No_Empty_Docks

– Timestamp

You need to apply transformation and filter logic to prepare the data for consumption. The solution must return data for a neighbourhood named Sands End when No_Bikes is at least 15. The results must be ordered by No_Bikes in ascending order.

Solution: You use the following code segment:

Does this meet the goal?

- A . Yes

- B . No

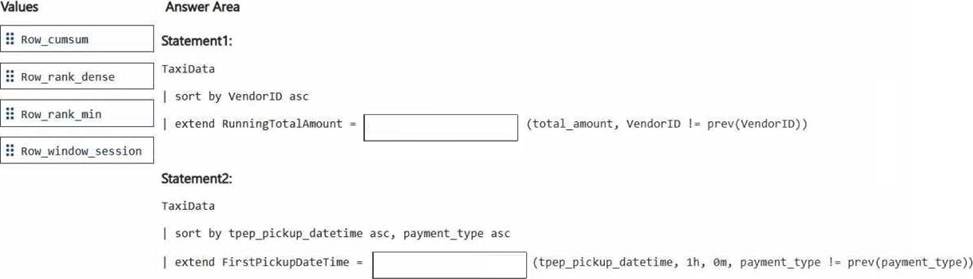

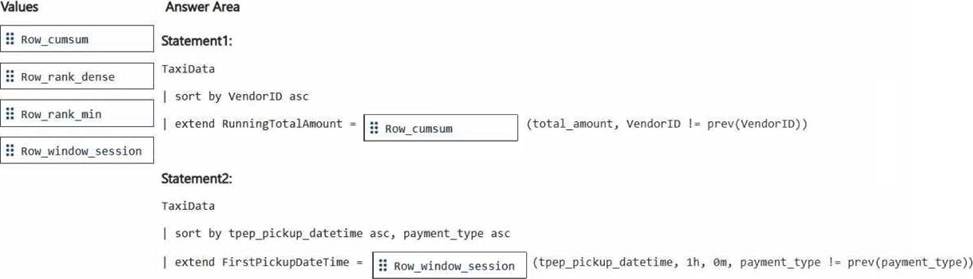

DRAG DROP

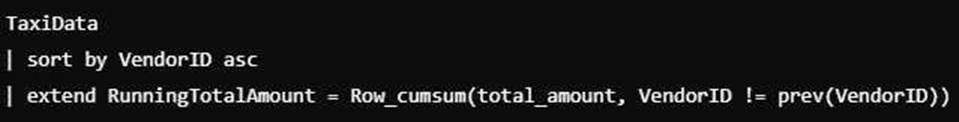

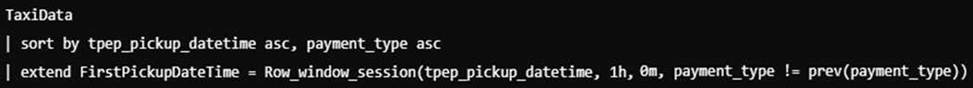

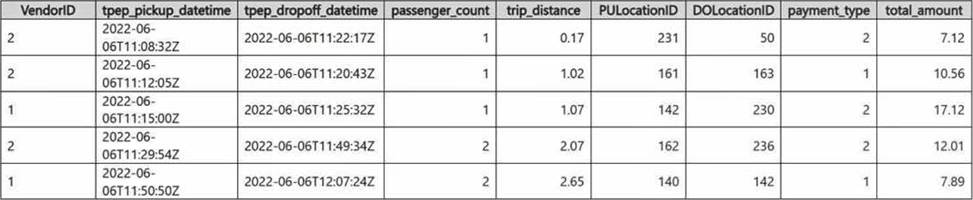

You have a Fabric eventhouse that contains a KQL database. The database contains a table named TaxiData.

The following is a sample of the data in TaxiData.

You need to build two KQL queries. The solution must meet the following requirements:

– One of the queries must partition RunningTotalAmount by VendorID.

– The other query must create a column named FirstPickupDateTime that shows the first value of each hour from tpep_pickup_datetime partitioned by payment_type.

How should you complete each query? To answer, drag the appropriate values to the correct targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.
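The two requirements can be illustrated in plain Python as a rough sketch; this is not the KQL from the answer area. It assumes a hypothetical amount column named `total_amount` (the sample data is not reproduced here) and interprets "first value of each hour" as the earliest pickup within each fixed clock hour, which may differ from how a KQL window function groups rows.

```python
from datetime import datetime
from itertools import groupby

# Hypothetical sample rows; total_amount is an assumed column name.
taxi = [
    {"VendorID": 1, "payment_type": 1, "total_amount": 10.0,
     "tpep_pickup_datetime": datetime(2024, 1, 1, 8, 5)},
    {"VendorID": 1, "payment_type": 2, "total_amount": 5.0,
     "tpep_pickup_datetime": datetime(2024, 1, 1, 8, 40)},
    {"VendorID": 2, "payment_type": 1, "total_amount": 7.5,
     "tpep_pickup_datetime": datetime(2024, 1, 1, 8, 20)},
    {"VendorID": 2, "payment_type": 1, "total_amount": 2.5,
     "tpep_pickup_datetime": datetime(2024, 1, 1, 9, 10)},
]


def running_total_by_vendor(rows):
    """Cumulative sum of total_amount that restarts for each VendorID."""
    ordered = sorted(rows, key=lambda r: (r["VendorID"], r["tpep_pickup_datetime"]))
    out = []
    for _, group in groupby(ordered, key=lambda r: r["VendorID"]):
        total = 0.0
        for r in group:
            total += r["total_amount"]
            out.append({**r, "RunningTotalAmount": total})
    return out


def hour_bucket(ts):
    """Truncate a timestamp to the start of its clock hour."""
    return ts.replace(minute=0, second=0, microsecond=0)


def first_pickup_per_hour(rows):
    """Add the earliest pickup per (payment_type, clock hour) to every row."""
    first = {}
    for r in rows:
        key = (r["payment_type"], hour_bucket(r["tpep_pickup_datetime"]))
        if key not in first or r["tpep_pickup_datetime"] < first[key]:
            first[key] = r["tpep_pickup_datetime"]
    return [
        {**r, "FirstPickupDateTime":
            first[(r["payment_type"], hour_bucket(r["tpep_pickup_datetime"]))]}
        for r in rows
    ]


totals = running_total_by_vendor(taxi)
firsts = first_pickup_per_hour(taxi)
```

Both functions sort or group by the partition column first, which is the same precondition a partitioned window calculation relies on.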