Databricks Certified Data Engineer Professional Practice Exams

Last updated on 26.04.2025

- Exam code: Databricks Certified Data Engineer Professional

- Exam name: Databricks Certified Data Engineer Professional Exam

- Certification provider: Databricks

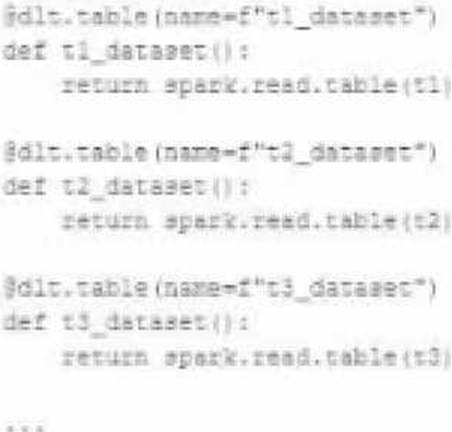

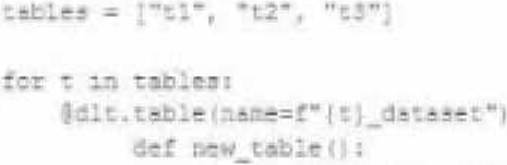

A data engineer wants to refactor the following DLT code, which includes multiple table definitions with very similar code:

In an attempt to programmatically create these tables using a parameterized table definition, the data engineer writes the following code.

The pipeline runs an update with this refactored code, but generates a different DAG showing incorrect configuration values for tables.

How can the data engineer fix this?

- A . Convert the list of configuration values to a dictionary of table settings, using table names as keys.

- B . Convert the list of configuration values to a dictionary of table settings, using a different input to the for loop.

- C . Load the configuration values for these tables from a separate file, located at a path provided by a pipeline parameter.

- D . Wrap the loop inside another table definition, using generalized names and properties to replace with those from the inner table.
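The refactored code itself is not reproduced in this text, so the following is only a general illustration, not a reconstruction of the engineer's pipeline. One behavior worth keeping in mind when functions are defined inside a `for` loop is that Python closures look up the loop variable when the function is called, not when it is defined. The sketch below uses plain Python with no DLT imports; the names `configs` and `make_definition` and the values in the list are hypothetical.

```python
# Hypothetical configuration values; stand-ins for per-table settings.
configs = ["bronze", "silver", "gold"]

# Late binding: each function reads `cfg` when it is called,
# by which time the loop has finished and `cfg` holds the last value.
late_bound = []
for cfg in configs:
    def definition():
        return f"table_{cfg}"
    late_bound.append(definition)


# Pinning the value: the helper's parameter lives in a new scope per call,
# so each returned function keeps its own `cfg`.
def make_definition(cfg):
    def definition():
        return f"table_{cfg}"
    return definition


pinned = [make_definition(cfg) for cfg in configs]

late_results = [f() for f in late_bound]
pinned_results = [f() for f in pinned]
print(late_results)    # every entry uses the last configuration value
print(pinned_results)  # each entry uses its own configuration value
```

Whether this is what went wrong in the pipeline described above cannot be confirmed from the text alone. It is a plausible way for loop-generated definitions to end up with identical, incorrect settings.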

In which scenario would a Databricks workspace administrator configure clusters to terminate after 30 minutes of inactivity?

- A . To control costs

- B . To increase performance

- C . To reserve resources for high-priority jobs

- D . To enforce security best practices

- E . To reduce the risk of data leaks

A junior data engineer has manually configured a series of jobs using the Databricks Jobs UI. Upon reviewing their work, the engineer realizes that they are listed as the "Owner" for each job. They attempt to transfer "Owner" privileges to the "DevOps" group, but cannot successfully accomplish this task.

Which statement explains what is preventing this privilege transfer?

- A . Databricks jobs must have exactly one owner; "Owner" privileges cannot be assigned to a group.

- B . The creator of a Databricks job will always have "Owner" privileges; this configuration cannot be changed.

- C . Other than the default "admins" group, only individual users can be granted privileges on jobs.

- D . A user can only transfer job ownership to a group if they are also a member of that group.

- E . Only workspace administrators can grant "Owner" privileges to a group.

Which REST API call can be used to review the notebooks configured to run as tasks in a multi-task job?

- A . /jobs/runs/list

- B . /jobs/runs/get-output

- C . /jobs/runs/get

- D . /jobs/get

- E . /jobs/list
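As a rough illustration of what a multi-task job definition looks like as JSON, the sketch below walks a hand-written dictionary shaped like a job definition in the Jobs API 2.1, where each notebook task carries a `notebook_task.notebook_path`. The payload, job ID, task keys, and paths are invented, the helper name `notebook_paths` is hypothetical, and no HTTP call is made.

```python
from typing import Any

# Hand-written sample; all IDs, names, and paths are invented.
sample_job: dict[str, Any] = {
    "job_id": 123,
    "settings": {
        "name": "nightly-etl",
        "tasks": [
            {"task_key": "ingest",
             "notebook_task": {"notebook_path": "/Repos/etl/ingest"}},
            {"task_key": "build_wheel",
             "python_wheel_task": {"package_name": "etl"}},
            {"task_key": "report",
             "notebook_task": {"notebook_path": "/Repos/etl/report"}},
        ],
    },
}


def notebook_paths(job: dict[str, Any]) -> dict[str, str]:
    """Map task_key -> notebook_path for every notebook task in the job."""
    tasks = job.get("settings", {}).get("tasks", [])
    return {
        task["task_key"]: task["notebook_task"]["notebook_path"]
        for task in tasks
        if "notebook_task" in task  # skip wheel, JAR, and other task types
    }


paths = notebook_paths(sample_job)
print(paths)
```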

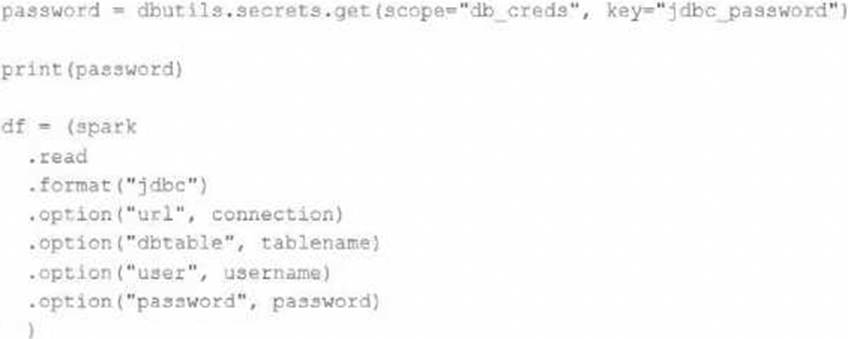

The security team is exploring whether or not the Databricks secrets module can be leveraged for connecting to an external database.

After testing the code with all Python variables being defined with strings, they upload the password to the secrets module and configure the correct permissions for the currently active user. They then modify their code to the following (leaving all other variables unchanged).

Which statement describes what will happen when the above code is executed?

- A . The connection to the external table will fail; the string "redacted" will be printed.

- B . An interactive input box will appear in the notebook; if the right password is provided, the connection will succeed and the encoded password will be saved to DBFS.

- C . An interactive input box will appear in the notebook; if the right password is provided, the connection will succeed and the password will be printed in plain text.

- D . The connection to the external table will succeed; the string value of password will be printed in plain text.

- E . The connection to the external table will succeed; the string "redacted" will be printed.

When evaluating the Ganglia Metrics for a given cluster with 3 executor nodes, which indicator would signal proper utilization of the VM’s resources?

- A . The Five Minute Load Average remains consistent/flat

- B . Bytes Received never exceeds 80 million bytes per second

- C . Network I/O never spikes

- D . Total Disk Space remains constant

- E . CPU Utilization is around 75%

The marketing team is looking to share data in an aggregate table with the sales organization, but the field names used by the teams do not match, and a number of marketing-specific fields have not been approved for the sales org.

Which of the following solutions addresses the situation while emphasizing simplicity?

- A . Create a view on the marketing table selecting only those fields approved for the sales team; alias the names of any fields that should be standardized to the sales naming conventions.

- B . Use a CTAS statement to create a derivative table from the marketing table; configure a production job to propagate changes.

- C . Add a parallel table write to the current production pipeline, updating a new sales table that varies as required from the marketing table.

- D . Create a new table with the required schema and use Delta Lake’s DEEP CLONE functionality to sync up changes committed to one table to the corresponding table.
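For readers who want to see what exposing a renamed subset of columns through a view looks like, here is a minimal sketch. It uses Python's built-in `sqlite3` module purely as a stand-in for a Databricks SQL environment, and every table and column name (`marketing_agg`, `sales_view`, `mkt_region`, `internal_score`, and so on) is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical marketing table; `internal_score` plays the role of a
# field that has not been approved for the other team.
conn.execute(
    "CREATE TABLE marketing_agg ("
    "mkt_region TEXT, mkt_revenue REAL, internal_score REAL)"
)
conn.executemany(
    "INSERT INTO marketing_agg VALUES (?, ?, ?)",
    [("EMEA", 1200.0, 0.7), ("APAC", 950.0, 0.4)],
)

# The view selects only the approved columns and aliases them
# to the consumer's naming convention.
conn.execute(
    """
    CREATE VIEW sales_view AS
    SELECT mkt_region AS region, mkt_revenue AS revenue
    FROM marketing_agg
    """
)

cursor = conn.execute("SELECT * FROM sales_view ORDER BY region")
columns = [col[0] for col in cursor.description]
rows = cursor.fetchall()
print(columns)
print(rows)
conn.close()
```

Because a plain view is evaluated against the base table at query time, later changes to the base table show up without a separate sync job.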

Which statement describes Delta Lake Auto Compaction?

- A . An asynchronous job runs after the write completes to detect if files could be further compacted; if yes, an optimize job is executed toward a default of 1 GB.

- B . Before a Jobs cluster terminates, optimize is executed on all tables modified during the most recent job.

- C . Optimized writes use logical partitions instead of directory partitions; because partition boundaries are only represented in metadata, fewer small files are written.

- D . Data is queued in a messaging bus instead of committing data directly to memory; all data is committed from the messaging bus in one batch once the job is complete.

- E . An asynchronous job runs after the write completes to detect if files could be further compacted; if yes, an optimize job is executed toward a default of 128 MB.

What statement is true regarding the retention of job run history?

- A . It is retained until you export or delete job run logs

- B . It is retained for 30 days, during which time you can deliver job run logs to DBFS or S3

- C . It is retained for 60 days, during which you can export notebook run results to HTML

- D . It is retained for 60 days, after which logs are archived

- E . It is retained for 90 days or until the run-id is re-used through custom run configuration

Which distribution does Databricks support for installing custom Python code packages?

- A . sbt

- B . CRAN

- C . CRAM

- D . npm

- E . Wheels

- F . jars