Amazon MLA-C01 Practice Exams

Last updated on 27.04.2025

- Exam code: MLA-C01

- Exam name: AWS Certified Machine Learning Engineer - Associate

- Certification provider: Amazon

You are a data scientist working for a media company that processes large volumes of video and image data to generate personalized content recommendations. The dataset, which is stored in Amazon S3, contains tens of millions of small image files and several terabytes of high-resolution large video files. The training jobs you run on Amazon SageMaker require low-latency access to this data and need to be completed quickly to keep up with the dynamic content pipeline.

Given the characteristics of your data and the requirements for low-latency, high-throughput access, which approach is the MOST APPROPRIATE for this scenario?

- A . Use Fast File mode with Amazon S3 for the large video files, enabling on-demand streaming of data, and store the small image files locally on the training instances to reduce I/O latency

- B . Use Amazon FSx for Lustre to mount the entire dataset as a high-performance file system, providing consistently low-latency access to both the small image files and the large video files

- C . Create an FSx for Lustre file system linked with the Amazon S3 bucket folder having the training data for the small image files and apply Fast File mode for the video files in the relevant Amazon S3 bucket folder, thereby combining the strengths of both approaches

- D . Use Fast File mode with Amazon S3 to stream the small image files directly to the training instances on-demand, minimizing the time required to start training

Which of the following highlights the differences between model parameters and hyperparameters in the context of generative AI?

- A . Both hyperparameters and model parameters are values that define a model and its behavior in interpreting input and generating responses

- B . Model parameters are values that define a model and its behavior in interpreting input and generating responses. Hyperparameters are values that can be adjusted for model customization to control the training process

- C . Hyperparameters are values that define a model and its behavior in interpreting input and generating responses. Model parameters are values that can be adjusted for model customization to control the training process

- D . Both hyperparameters and model parameters are values that can be adjusted for model customization to control the training process
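To make the two terms in this question concrete, here is a minimal sketch in plain Python. It uses a tiny linear regression rather than a generative model, and the names `LEARNING_RATE`, `EPOCHS`, and `train_linear` are illustrative assumptions, not part of any AWS API. The values set before training steer the training loop, while `w` and `b` are learned from the data.

```python
# Values fixed before training starts (assumed values for illustration).
LEARNING_RATE = 0.05
EPOCHS = 500


def train_linear(xs, ys, learning_rate=LEARNING_RATE, epochs=EPOCHS):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    # Values learned from the data during training.
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1
w, b = train_linear(xs, ys)
print(f"learned values: w={w:.2f}, b={b:.2f}")
```

Changing `LEARNING_RATE` or `EPOCHS` changes how training proceeds; `w` and `b` are whatever the training run produces.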

You are a machine learning engineer at a fintech company responsible for maintaining the ML infrastructure that powers real-time credit scoring for loan applications. The system must handle high volumes of requests with low latency and be resilient to any failures. To ensure the infrastructure meets the company’s performance and reliability requirements, you need to monitor key performance metrics related to scalability, availability, utilization, throughput and fault tolerance.

Which combination of metrics and monitoring strategies is the MOST EFFECTIVE for ensuring the ML infrastructure meets these requirements?

- A . Monitor CPU and memory utilization to ensure that compute resources are not overburdened, track request throughput to measure the number of predictions per second, and use auto-scaling policies to maintain high availability and scalability during traffic spikes

- B . Track model accuracy and precision to ensure that predictions are correct, monitor disk space utilization to prevent storage overflows, and manually scale the infrastructure during peak usage periods

- C . Monitor training loss and validation accuracy to track model performance, measure network bandwidth to ensure efficient data transfer, and use Amazon CloudWatch alarms to automatically restart failed instances

- D . Measure latency to ensure predictions are delivered quickly, monitor the number of failed requests to assess fault tolerance, and use scheduled scaling to adjust resources based on anticipated demand
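The operational metrics named in this question (throughput, latency, failed requests) can be derived from raw request records. The sketch below is a plain-Python illustration under stated assumptions: the `(latency_ms, succeeded)` record shape and the function name `summarize_requests` are hypothetical and do not correspond to a CloudWatch data format.

```python
def summarize_requests(records, window_seconds):
    """Compute throughput, error rate, and p95 latency for one time window.

    `records` is a list of (latency_ms, succeeded) tuples; this shape is
    an assumption made for the sketch.
    """
    total = len(records)
    if total == 0:
        return {"throughput_rps": 0.0, "error_rate": 0.0, "p95_latency_ms": None}
    failures = sum(1 for _, ok in records if not ok)
    latencies = sorted(latency for latency, _ in records)
    # Nearest-rank percentile: ceil(0.95 * total), done in integer arithmetic.
    rank = (95 * total + 99) // 100
    return {
        "throughput_rps": total / window_seconds,
        "error_rate": failures / total,
        "p95_latency_ms": latencies[rank - 1],
    }


# Made-up sample: 18 fast requests, one slow request, one failed request.
sample = [(20, True)] * 18 + [(250, True), (900, False)]
summary = summarize_requests(sample, window_seconds=10)
print(summary)
```

In practice these figures would come from a monitoring service rather than an in-memory list; the arithmetic is the same.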

You are a machine learning engineer at a financial services company tasked with building a real-time fraud detection system. The model needs to be highly accurate to minimize false positives and false negatives. However, the company has a limited budget for cloud resources, and the model needs to be retrained frequently to adapt to new fraud patterns. You must carefully balance model performance, training time, and cost to meet these requirements.

Which of the following strategies is the MOST LIKELY to achieve an optimal balance between model performance, training time, and cost?

- A . Deploy a simpler model like logistic regression to reduce training time and cost, while accepting a slight reduction in model accuracy

- B . Implement a tree-based model like XGBoost with early stopping and hyperparameter tuning, balancing accuracy with reduced training time and computational cost

- C . Use a deep neural network with multiple layers and complex architecture to maximize performance, even if it requires significant computational resources and longer training times

- D . Choose a support vector machine (SVM) with a nonlinear kernel to enhance accuracy, regardless of the increased training time and cost associated with large datasets
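Early stopping, mentioned in one of the options above, can be sketched independently of any particular library. The function below is a minimal illustration, assuming a hypothetical list of per-round validation losses and a `patience` setting; it is not XGBoost's own implementation.

```python
def train_with_early_stopping(validation_losses, patience=2):
    """Return (best_round, best_loss), stopping once the loss stalls.

    `validation_losses` stands in for the per-round validation loss a real
    trainer would report; the values used below are made up.
    """
    best_loss = float("inf")
    best_round = -1
    rounds_without_improvement = 0
    for round_index, loss in enumerate(validation_losses):
        if loss < best_loss:
            best_loss, best_round = loss, round_index
            rounds_without_improvement = 0
        else:
            rounds_without_improvement += 1
            if rounds_without_improvement >= patience:
                break  # no improvement for `patience` consecutive rounds
    return best_round, best_loss


losses = [0.60, 0.45, 0.38, 0.36, 0.37, 0.39, 0.35]
result = train_with_early_stopping(losses)
print(result)
```

With `patience=2` the loop stops after the sixth value and never evaluates the last one, which is the trade-off: fewer training rounds in exchange for possibly missing a later improvement.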

How is deep learning different from general machine learning?

- A . It requires no domain knowledge.

- B . It uses a layered architecture mimicking the human brain.

- C . It relies solely on historical data.

- D . It is unrelated to pattern recognition.

Which AWS services are specifically designed to aid in monitoring machine learning models and incorporating human review processes? (Select two)

- A . Amazon SageMaker Feature Store

- B . Amazon SageMaker Ground Truth

- C . Amazon Augmented AI (Amazon A2I)

- D . Amazon SageMaker Data Wrangler

- E . Amazon SageMaker Model Monitor

You are a data scientist at a healthcare company working on deploying a machine learning model that predicts patient outcomes based on real-time data from wearable devices. The model needs to be containerized for easy deployment and scaling across different environments, including development, testing, and production. The company wants to ensure that container images are managed efficiently, securely, and consistently across all environments.

Given these requirements, which combination of AWS services is the MOST SUITABLE for building, storing, deploying, and maintaining the containerized ML solution?

- A . Use Docker Hub to store the container images, Amazon EKS for orchestrating the containers, and AWS Lambda to trigger updates to the containers when new images are pushed

- B . Use Amazon ECR to store the container images, Amazon EKS for orchestrating the containers, and AWS CodePipeline for automating the CI/CD pipeline, ensuring that updates to the model are seamlessly deployed

- C . Use Amazon ECR to store container images, manually deploy containers on Amazon EC2 instances, and use AWS CloudFormation to manage the infrastructure configuration

- D . Use Amazon ECS to manage and deploy the containerized model, Amazon S3 to store container images, and manually push updates to the containers using the AWS CLI

You are a machine learning engineer at a fintech company tasked with developing and deploying an end-to-end machine learning workflow for fraud detection. The workflow involves multiple steps, including data extraction, preprocessing, feature engineering, model training, hyperparameter tuning, and deployment. The company requires the solution to be scalable, support complex dependencies between tasks, and provide robust monitoring and versioning capabilities. Additionally, the workflow needs to integrate seamlessly with existing AWS services.

Which deployment orchestrator is the MOST SUITABLE for managing and automating your ML workflow?

- A . Use AWS Step Functions to build a serverless workflow that integrates with SageMaker for model training and deployment, ensuring scalability and fault tolerance

- B . Use AWS Lambda functions to manually trigger each step of the ML workflow, enabling flexible execution without needing a predefined orchestration tool

- C . Use Amazon SageMaker Pipelines to orchestrate the entire ML workflow, leveraging its built-in integration with SageMaker features like training, tuning, and deployment

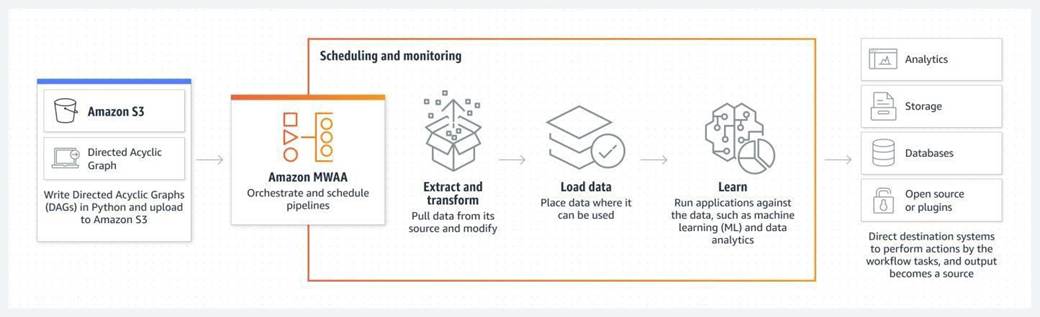

- D . Use Apache Airflow to define and manage the workflow with custom DAGs (Directed Acyclic Graphs), integrating with AWS services through operators and hooks
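The "complex dependencies between tasks" in this scenario amount to a directed acyclic graph of steps. As a rough sketch of what any orchestrator has to resolve, the example below orders hypothetical step names with Python's standard-library `graphlib`; the step names and their dependencies are assumptions for illustration, not a definition of any AWS service's pipeline.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps it depends on (names are illustrative).
workflow = {
    "extract": set(),
    "preprocess": {"extract"},
    "feature_engineering": {"preprocess"},
    "train": {"feature_engineering"},
    "tune": {"feature_engineering"},
    "deploy": {"train", "tune"},
}


def execution_order(steps):
    """Return one valid run order; raises graphlib.CycleError on a cycle."""
    return list(TopologicalSorter(steps).static_order())


order = execution_order(workflow)
print(order)
```

A real orchestrator adds retries, monitoring, and versioning on top of this ordering step.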

What is Feature Engineering in the context of machine learning?

- A . Feature Engineering involves selecting, modifying, or creating features from raw data to improve the performance of machine learning models, and it is important because it can significantly enhance model accuracy and efficiency

- B . Feature Engineering refers to the visualization of data to understand patterns, and it is important because it helps in identifying trends in the dataset

- C . Feature Engineering is the process of tuning hyperparameters in a machine learning model, and it is important because it optimizes the model’s performance

- D . Feature Engineering is the process of collecting raw data, and it is important because it ensures the availability of data for model training
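For readers who want a concrete picture of deriving features from raw data, here is a small plain-Python sketch. The record fields (`amount`, `timestamp`, `account_avg_amount`) and the function name `engineer_features` are hypothetical and chosen only for this illustration.

```python
from datetime import datetime
import math


def engineer_features(transaction):
    """Derive model-ready features from one raw transaction record.

    The field names are assumptions made for this sketch.
    """
    ts = datetime.fromisoformat(transaction["timestamp"])
    amount = transaction["amount"]
    return {
        # Transform: compress a heavily skewed amount scale.
        "log_amount": math.log1p(amount),
        # Extract: pull a useful component out of the timestamp.
        "hour_of_day": ts.hour,
        # Create: weekday() returns 5 for Saturday and 6 for Sunday.
        "is_weekend": int(ts.weekday() >= 5),
        # Combine: relate this amount to the account's usual amount.
        "amount_vs_avg": amount / transaction["account_avg_amount"],
    }


raw = {
    "amount": 300.0,
    "timestamp": "2025-04-26T23:15:00",
    "account_avg_amount": 60.0,
}
features = engineer_features(raw)
print(features)
```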