Databricks Databricks Machine Learning Associate Übungsprüfungen

Zuletzt aktualisiert am 19.12.2025- Prüfungscode: Databricks Machine Learning Associate

- Prüfungsname: Databricks Certified Machine Learning Associate Exam

- Zertifizierungsanbieter: Databricks

- Zuletzt aktualisiert am: 19.12.2025

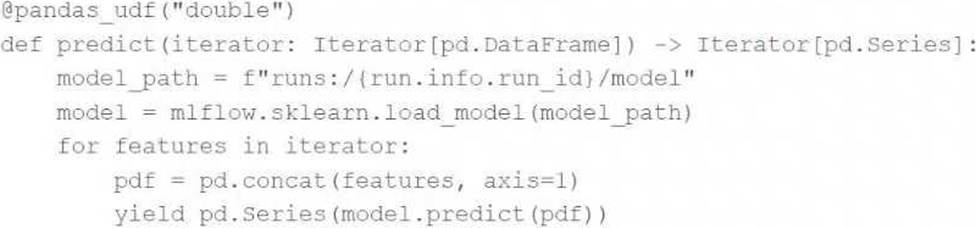

A data scientist has defined a Pandas UDF function predict to parallelize the inference process for a single-node model:

They have written the following incomplete code block to use predict to score each record of Spark DataFrame spark_df:

Which of the following lines of code can be used to complete the code block to successfully complete the task?

- A . predict(*spark_df.columns)

- B . mapInPandas(predict)

- C . predict(Iterator(spark_df))

- D . mapInPandas(predict(spark_df.columns))

- E . predict(spark_df.columns)

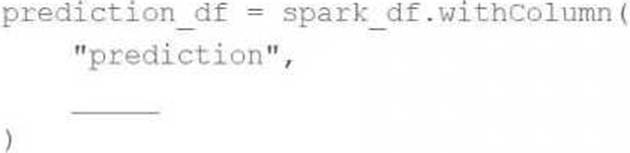

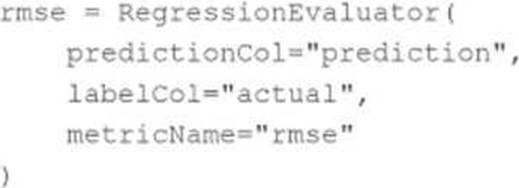

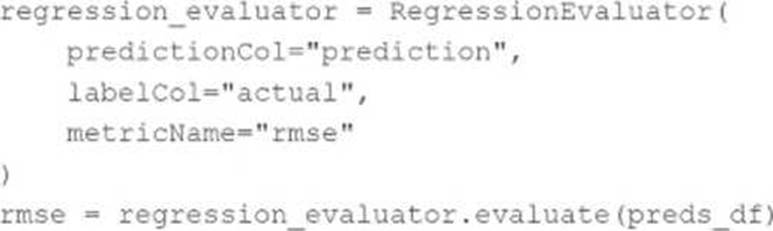

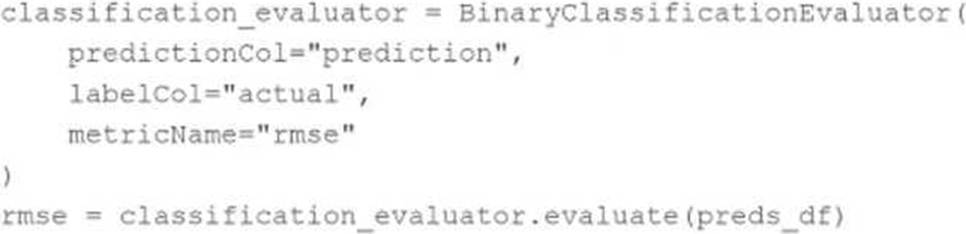

A data scientist has developed a linear regression model using Spark ML and computed the predictions in a Spark DataFrame preds_df with the following schema: prediction DOUBLE actual DOUBLE

Which of the following code blocks can be used to compute the root mean-squared-error of the model according to the data in preds_df and assign it to the rmse variable?

A)

B)

C)

D)

- A . Option A

- B . Option B

- C . Option C

- D . Option D

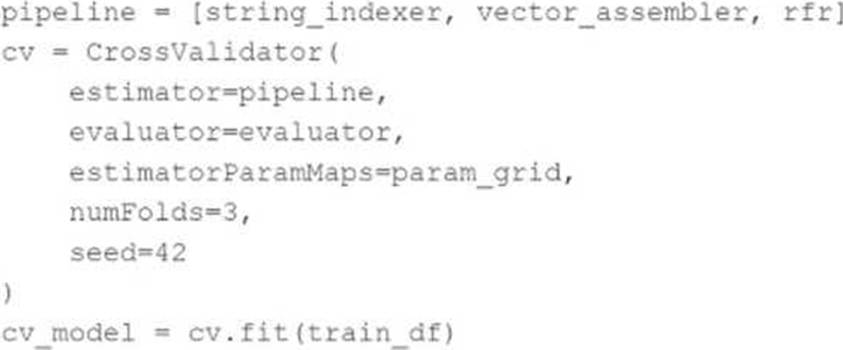

A data scientist has developed a random forest regressor rfr and included it as the final stage in a Spark MLPipeline pipeline.

They then set up a cross-validation process with pipeline as the estimator in the following code block:

Which of the following is a negative consequence of including pipeline as the estimator in the cross-validation process rather than rfr as the estimator?

- A . The process will have a longer runtime because all stages of pipeline need to be refit or retransformed with each mode

- B . The process will leak data from the training set to the test set during the evaluation phase

- C . The process will be unable to parallelize tuning due to the distributed nature of pipeline

- D . The process will leak data prep information from the validation sets to the training sets for each model

Which of the following evaluation metrics is not suitable to evaluate runs in AutoML experiments for regression problems?

- A . F1

- B . R-squared

- C . MAE

- D . MSE

A new data scientist has started working on an existing machine learning project. The project is a scheduled Job that retrains every day. The project currently exists in a Repo in Databricks. The data scientist has been tasked with improving the feature engineering of the pipeline’s preprocessing stage. The data scientist wants to make necessary updates to the code that can be easily adopted into the project without changing what is being run each day.

Which approach should the data scientist take to complete this task?

- A . They can create a new branch in Databricks, commit their changes, and push those changes to the Git provider.

- B . They can clone the notebooks in the repository into a Databricks Workspace folder and make the necessary changes.

- C . They can create a new Git repository, import it into Databricks, and copy and paste the existing code from the original repository before making changes.

- D . They can clone the notebooks in the repository into a new Databricks Repo and make the necessary changes.

A data scientist is using MLflow to track their machine learning experiment. As a part of each of their MLflow runs, they are performing hyperparameter tuning. The data scientist would like to have one parent run for the tuning process with a child run for each unique combination of hyperparameter values. All parent and child runs are being manually started with mlflow.start_run.

Which of the following approaches can the data scientist use to accomplish this MLflow run organization?

- A . They can turn on Databricks Autologging

- B . They can specify nested=True when starting the child run for each unique combination of hyperparameter values

- C . They can start each child run inside the parent run’s indented code block using mlflow.start runO

- D . They can start each child run with the same experiment ID as the parent run

- E . They can specify nested=True when starting the parent run for the tuning process

A data scientist has produced two models for a single machine learning problem. One of the models performs well when one of the features has a value of less than 5, and the other model performs well when the value of that feature is greater than or equal to 5. The data scientist decides to combine the two models into a single machine learning solution.

Which of the following terms is used to describe this combination of models?

- A . Bootstrap aggregation

- B . Support vector machines

- C . Bucketing

- D . Ensemble learning

- E . Stacking

A data scientist is using the following code block to tune hyperparameters for a machine learning model:

Which change can they make the above code block to improve the likelihood of a more accurate model?

- A . Increase num_evals to 100

- B . Change fmin() to fmax()

- C . Change sparkTrials() to Trials()

- D . Change tpe.suggest to random.suggest

In Spark, how would a data scientist filter a DataFrame `spark_df` to include only rows where the column ‚price‘ is greater than 0?

- A . `spark_df.filter(col("price") > 0)`

- B . `spark_df[spark_df["price"] > 0]`

- C . `SELECT * FROM spark_df WHERE price > 0`

- D . `spark_df.where("price > 0")`

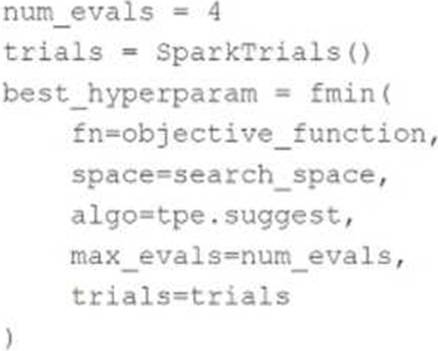

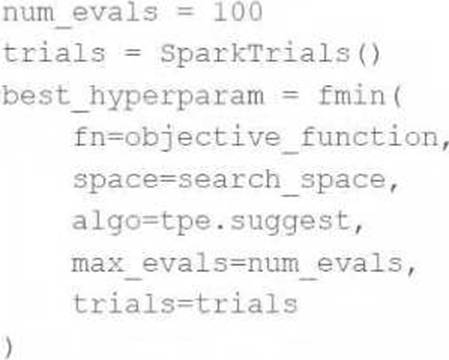

A data scientist wants to tune a set of hyperparameters for a machine learning model. They have wrapped a Spark ML model in the objective function objective_function and they have defined the search space search_space.

As a result, they have the following code block:

Which of the following changes do they need to make to the above code block in order to accomplish the task?

- A . Change SparkTrials() to Trials()

- B . Reduce num_evals to be less than 10

- C . Change fmin() to fmax()

- D . Remove the trials=trials argument

- E . Remove the algo=tpe.suggest argument