Databricks Databricks Machine Learning Associate Übungsprüfungen

Zuletzt aktualisiert am 26.04.2025- Prüfungscode: Databricks Machine Learning Associate

- Prüfungsname: Databricks Certified Machine Learning Associate Exam

- Zertifizierungsanbieter: Databricks

- Zuletzt aktualisiert am: 26.04.2025

What function in PySpark’s DataFrame API provides summary statistics?

- A . `spark_df.describe()`

- B . `spark_df.summary()`

- C . `spark_df.statistics()`

- D . `spark_df.info()`

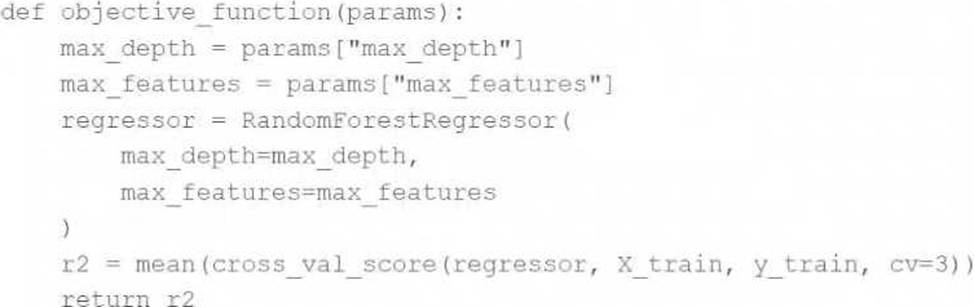

A data scientist wants to efficiently tune the hyperparameters of a scikit-learn model. They elect to use the Hyperopt library’s fmin operation to facilitate this process. Unfortunately, the final model is not very accurate. The data scientist suspects that there is an issue with the objective_function being passed as an argument to fmin.

They use the following code block to create the objective_function:

Which of the following changes does the data scientist need to make to their objective_function in order to produce a more accurate model?

- A . Add test set validation process

- B . Add a random_state argument to the RandomForestRegressor operation

- C . Remove the mean operation that is wrapping the cross_val_score operation

- D . Replace the r2 return value with -r2

- E . Replace the fmin operation with the fmax operation

A machine learning engineer is trying to scale a machine learning pipeline by distributing its single-node model tuning process. After broadcasting the entire training data onto each core, each core in the cluster can train one model at a time. Because the tuning process is still running slowly, the engineer wants to increase the level of parallelism from 4 cores to 8 cores to speed up the tuning process. Unfortunately, the total memory in the cluster cannot be increased.

In which of the following scenarios will increasing the level of parallelism from 4 to 8 speed up the tuning process?

- A . When the tuning process in randomized

- B . When the entire data can fit on each core

- C . When the model is unable to be parallelized

- D . When the data is particularly long in shape

- E . When the data is particularly wide in shape

A data scientist is wanting to explore summary statistics for Spark DataFrame spark_df. The data scientist wants to see the count, mean, standard deviation, minimum, maximum, and interquartile range (IQR) for each numerical feature.

Which of the following lines of code can the data scientist run to accomplish the task?

- A . spark_df.summary ()

- B . spark_df.stats()

- C . spark_df.describe().head()

- D . spark_df.printSchema()

- E . spark_df.toPandas()

A data scientist has been given an incomplete notebook from the data engineering team. The notebook uses a Spark DataFrame spark_df on which the data scientist needs to perform further feature engineering. Unfortunately, the data scientist has not yet learned the PySpark DataFrame API.

Which of the following blocks of code can the data scientist run to be able to use the pandas API on Spark?

- A . import pyspark.pandas as ps

df = ps.DataFrame(spark_df) - B . import pyspark.pandas as ps

df = ps.to_pandas(spark_df) - C . spark_df.to_sql()

- D . import pandas as pd

df = pd.DataFrame(spark_df) - E . spark_df.to_pandas()

Which of the Spark operations can be used to randomly split a Spark DataFrame into a training DataFrame and a test DataFrame for downstream use?

- A . TrainValidationSplit

- B . DataFrame.where

- C . CrossValidator

- D . TrainValidationSplitModel

- E . DataFrame.randomSplit

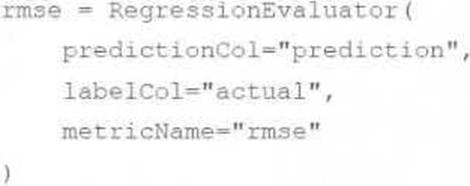

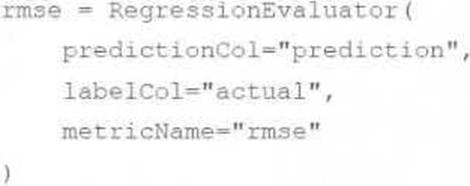

A data scientist has developed a linear regression model using Spark ML and computed the predictions in a Spark DataFrame preds_df with the following schema: prediction DOUBLE actual DOUBLE

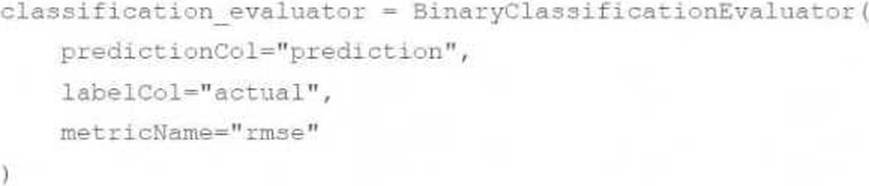

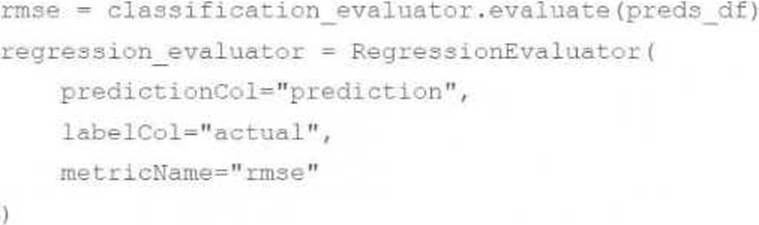

Which of the following code blocks can be used to compute the root mean-squared-error of the model according to the data in preds_df and assign it to the rmse variable?

A)

B)

C)

D)

E)

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

A machine learning engineer is converting a decision tree from sklearn to Spark ML. They notice that they are receiving different results despite all of their data and manually specified hyperparameter values being identical.

Which of the following describes a reason that the single-node sklearn decision tree and the Spark ML decision tree can differ?

- A . Spark ML decision trees test every feature variable in the splitting algorithm

- B . Spark ML decision trees automatically prune overfit trees

- C . Spark ML decision trees test more split candidates in the splitting algorithm

- D . Spark ML decision trees test a random sample of feature variables in the splitting algorithm

- E . Spark ML decision trees test binned features values as representative split candidates

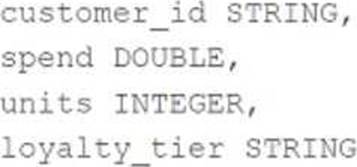

A data scientist is working with a feature set with the following schema:

The customer_id column is the primary key in the feature set. Each of the columns in the feature set has missing values. They want to replace the missing values by imputing a common value for each feature.

Which of the following lists all of the columns in the feature set that need to be imputed using the most common value of the column?

- A . customer_id, loyalty_tier

- B . loyalty_tier

- C . units

- D . spend

- E . customer_id

A data scientist learned during their training to always use 5-fold cross-validation in their model development workflow. A colleague suggests that there are cases where a train-validation split could be preferred over k-fold cross-validation when k > 2.

Which of the following describes a potential benefit of using a train-validation split over k-fold cross-validation in this scenario?

- A . A holdout set is not necessary when using a train-validation split

- B . Reproducibility is achievable when using a train-validation split

- C . Fewer hyperparameter values need to be tested when using a train-validation split

- D . Bias is avoidable when using a train-validation split

- E . Fewer models need to be trained when using a train-validation split