Microsoft DP-203 Practice Exams

Last updated on 26.04.2025

- Exam code: DP-203

- Exam name: Data Engineering on Microsoft Azure

- Certification provider: Microsoft

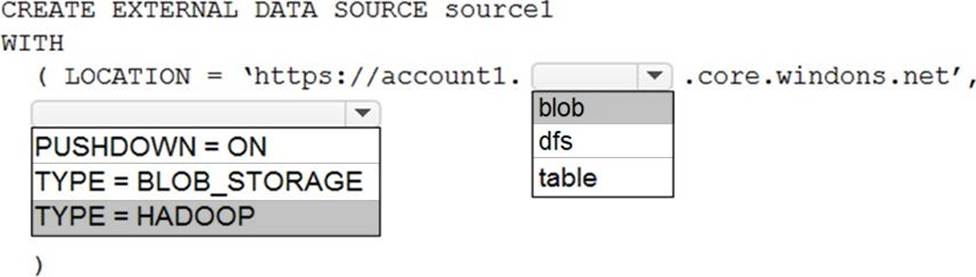

HOTSPOT

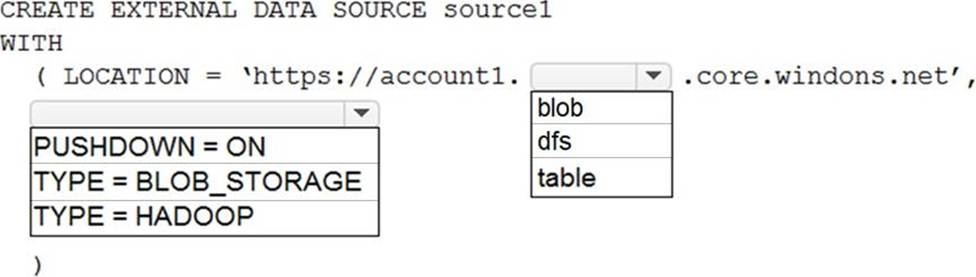

You have an Azure Synapse Analytics dedicated SQL pool named Pool1 and an Azure Data Lake Storage Gen2 account named Account1.

You plan to access the files in Account1 by using an external table.

You need to create a data source in Pool1 that you can reference when you create the external table.

How should you complete the Transact-SQL statement? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

You have an Azure Databricks resource.

You need to log actions that relate to changes in compute for the Databricks resource.

Which Databricks services should you log?

- A . clusters

- B . workspace

- C . DBFS

- D . SSH

- E . jobs

You are designing an Azure Databricks interactive cluster. The cluster will be used infrequently and will be configured for auto-termination.

You need to ensure that the cluster configuration is retained indefinitely after the cluster is terminated. The solution must minimize costs.

What should you do?

- A . Clone the cluster after it is terminated.

- B . Terminate the cluster manually when processing completes.

- C . Create an Azure runbook that starts the cluster every 90 days.

- D . Pin the cluster.

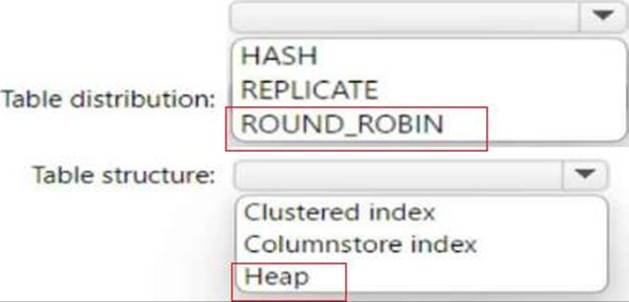

HOTSPOT

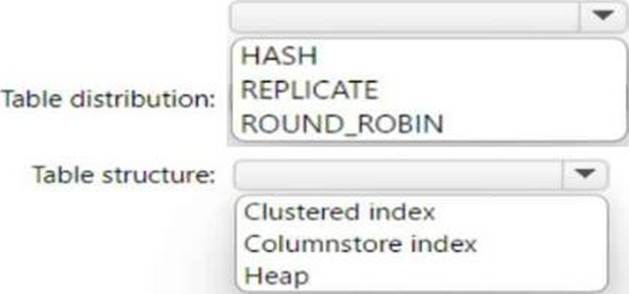

You are incrementally loading data into fact tables in an Azure Synapse Analytics dedicated SQL pool.

Each batch of incoming data is staged before being loaded into the fact tables.

You need to ensure that the incoming data is staged as quickly as possible.

How should you configure the staging tables? To answer, select the appropriate options in the answer area.

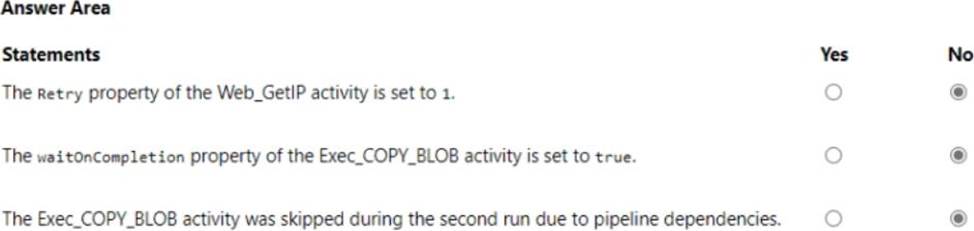

HOTSPOT

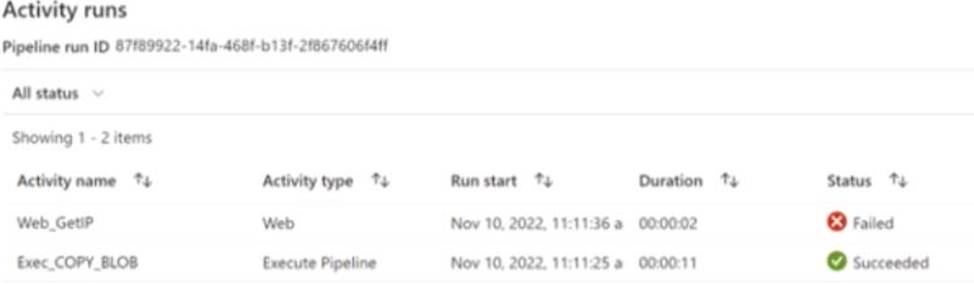

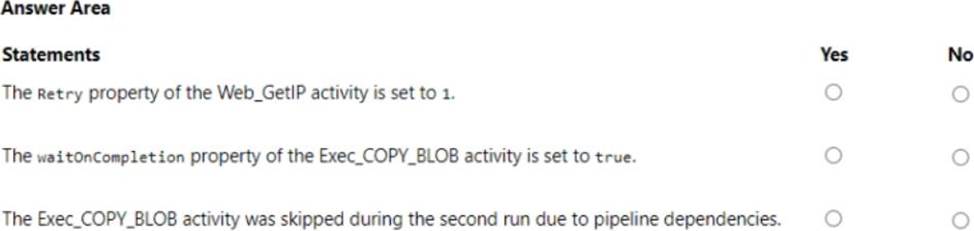

You have an Azure Data Factory pipeline shown in the following exhibit.

The execution log for the first pipeline run is shown in the following exhibit.

The execution log for the second pipeline run is shown in the following exhibit.

For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

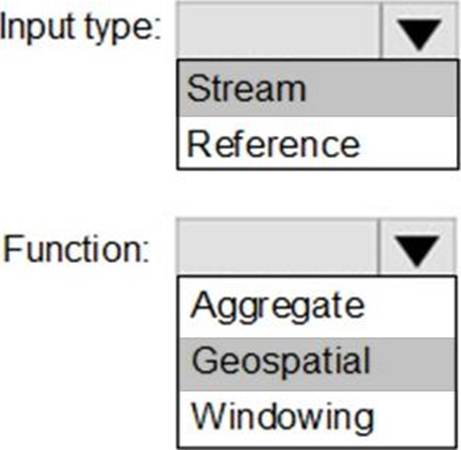

HOTSPOT

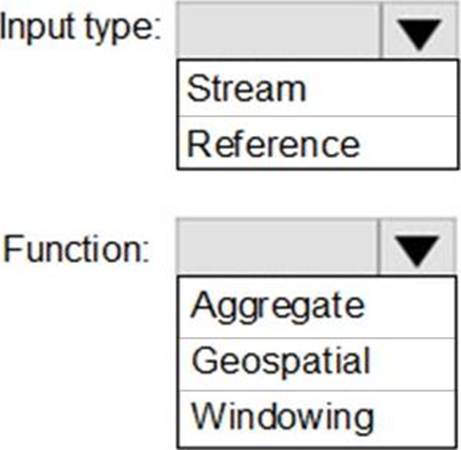

You plan to create a real-time monitoring app that alerts users when a device travels more than 200 meters away from a designated location.

You need to design an Azure Stream Analytics job to process the data for the planned app. The solution must minimize the amount of code developed and the number of technologies used.

What should you include in the Stream Analytics job? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.
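To make the 200-meter requirement concrete, here is a minimal Python sketch of the underlying distance check. It is not Stream Analytics query code. The function names, the 6,371 km Earth radius, and the sample coordinates are assumptions chosen for illustration only.

```python
import math

EARTH_RADIUS_M = 6_371_000  # assumed mean Earth radius in meters (approximation)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def should_alert(device, designated, threshold_m=200.0):
    """Return True when the device is more than threshold_m from the designated point."""
    return haversine_m(*device, *designated) > threshold_m


# Hypothetical coordinates: the second device position is about 222 m north.
designated = (47.6062, -122.3321)
near = (47.6072, -122.3321)   # roughly 111 m away
far = (47.6082, -122.3321)    # roughly 222 m away
```

With these sample points, `should_alert(near, designated)` stays below the threshold and `should_alert(far, designated)` exceeds it. A streaming job would apply the same comparison to every incoming device event.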

You have an Azure Synapse Analytics dedicated SQL pool that contains a table named Contacts.

Contacts contains a column named Phone.

You need to ensure that users in a specific role only see the last four digits of a phone number when querying the Phone column.

What should you include in the solution?

- A . a default value

- B . dynamic data masking

- C . row-level security (RLS)

- D . column encryption

- E . table partitions
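The requirement "only see the last four digits" can be pictured with a small Python sketch of the desired query output. It only illustrates the expected result for the restricted role, not how the SQL pool produces it. The function name, the `X` padding character, and the sample number are assumptions.

```python
def mask_phone(phone: str, visible: int = 4, pad: str = "X") -> str:
    """Replace every digit except the last `visible` ones with `pad`.

    Non-digit characters such as dashes are kept so the format stays readable.
    """
    digits_seen = 0
    out = []
    # Walk from the end so the trailing digits are the ones left visible.
    for ch in reversed(phone):
        if ch.isdigit():
            digits_seen += 1
            out.append(ch if digits_seen <= visible else pad)
        else:
            out.append(ch)
    return "".join(reversed(out))


masked = mask_phone("555-123-4567")  # hypothetical sample value
```

Here `masked` keeps only `4567` readable. The stored value itself is unchanged, since only the presentation differs for the restricted role.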

You have an Azure Data Lake Storage account that has a virtual network service endpoint configured.

You plan to use Azure Data Factory to extract data from the Data Lake Storage account. The data will then be loaded to a data warehouse in Azure Synapse Analytics by using PolyBase.

Which authentication method should you use to access Data Lake Storage?

- A . shared access key authentication

- B . managed identity authentication

- C . account key authentication

- D . service principal authentication

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are designing an Azure Stream Analytics solution that will analyze Twitter data.

You need to count the tweets in each 10-second window. The solution must ensure that each tweet is counted only once.

Solution: You use a hopping window that uses a hop size of 5 seconds and a window size of 10 seconds.

Does this meet the goal?

- A . Yes

- B . No
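The effect of the hop size on how often an event is counted can be checked with a short Python simulation. This is a simplified sketch, not Stream Analytics code: it uses half-open windows `[start, start + size)` aligned at zero, and the tweet timestamps are made up for illustration.

```python
def window_counts(timestamps, size, hop, horizon):
    """Count events per window [start, start + size) for starts 0, hop, 2*hop, ..."""
    counts = {}
    start = 0
    while start + size <= horizon:
        counts[(start, start + size)] = sum(
            start <= t < start + size for t in timestamps
        )
        start += hop
    return counts


# Hypothetical tweet arrival times in seconds.
tweets = [1, 4, 7, 12, 18]

# Hop smaller than the window size: consecutive windows overlap.
hopping = window_counts(tweets, size=10, hop=5, horizon=20)

# Hop equal to the window size: windows do not overlap (tumbling behaviour).
tumbling = window_counts(tweets, size=10, hop=10, horizon=20)
```

With a 5-second hop, the tweets at 7 and 12 seconds fall into two windows each, so the per-window counts add up to 7 for only 5 tweets. With a hop equal to the window size, the counts add up to exactly 5.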

You use Azure Data Lake Storage Gen2.

You need to ensure that workloads can use filter predicates and column projections to filter data at the time the data is read from disk.

Which two actions should you perform? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . Reregister the Microsoft Data Lake Store resource provider.

- B . Reregister the Azure Storage resource provider.

- C . Create a storage policy that is scoped to a container.

- D . Register the query acceleration feature.

- E . Create a storage policy that is scoped to a container prefix filter.