Microsoft DP-203 Practice Exams

Last updated on 28.04.2025

- Exam code: DP-203

- Exam name: Data Engineering on Microsoft Azure

- Certification provider: Microsoft

HOTSPOT

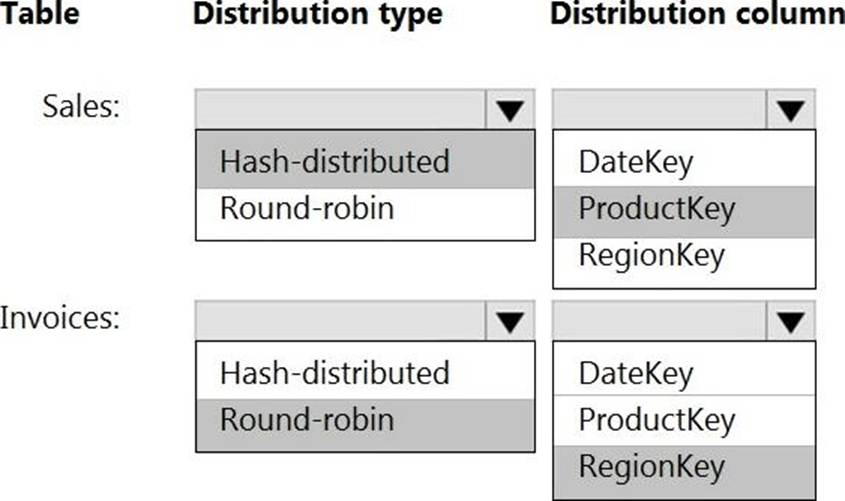

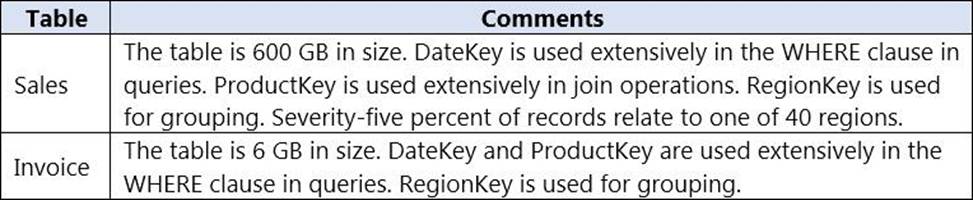

You have an on-premises data warehouse that includes the following fact tables. Both tables have the following columns: DateKey, ProductKey, RegionKey.

There are 120 unique product keys and 65 unique region keys.

Queries that use the data warehouse take a long time to complete.

You plan to migrate the solution to use Azure Synapse Analytics. You need to ensure that the Azure-based solution optimizes query performance and minimizes processing skew.

What should you recommend? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.
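As a rough illustration of why the number of distinct key values matters for processing skew, the sketch below spreads rows across the 60 distributions of a dedicated SQL pool using a stand-in hash (key modulo 60). The real hash function is internal to Azure Synapse Analytics, and the integer key ranges and the equal row count per key are assumptions made only to show the arithmetic.

```python
from collections import Counter

DISTRIBUTIONS = 60  # a dedicated SQL pool spreads each table over 60 distributions


def rows_per_distribution(keys, rows_per_key=1000):
    """Assign each key to a distribution with a stand-in hash (key modulo 60)."""
    counts = Counter({d: 0 for d in range(DISTRIBUTIONS)})
    for key in keys:
        counts[key % DISTRIBUTIONS] += rows_per_key
    return counts


def skew_ratio(counts):
    """Largest distribution divided by the average; 1.0 means perfectly even."""
    average = sum(counts.values()) / DISTRIBUTIONS
    return max(counts.values()) / average


# Assumed key ranges: 120 product keys (1..120) and 65 region keys (1..65).
product_skew = skew_ratio(rows_per_distribution(range(1, 121)))
region_skew = skew_ratio(rows_per_distribution(range(1, 66)))
```

Under these assumptions the 120 product keys fill every distribution equally, while the 65 region keys leave five distributions holding roughly twice as many rows as the rest.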

You are designing a security model for an Azure Synapse Analytics dedicated SQL pool that will support multiple companies. You need to ensure that users from each company can view only the data of their respective company.

Which two objects should you include in the solution? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- A . a custom role-based access control (RBAC) role

- B . asymmetric keys

- C . a predicate function

- D . a column encryption key

- E . a security policy
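Restricting each user to the rows of their own company is a row-level filtering problem. The sketch below is a conceptual Python model only, not T-SQL: a predicate decides whether a row is visible, and a separate function applies that predicate to a table. The `Row` type, the company names, and the function names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Row:
    company: str
    amount: float


def company_predicate(row: Row, user_company: str) -> bool:
    """Filter predicate: a row is visible only to users of the same company."""
    return row.company == user_company


def apply_filter(rows, user_company, predicate=company_predicate):
    """Stand-in for the object that binds a predicate to a table."""
    return [row for row in rows if predicate(row, user_company)]


# Hypothetical sample data for two tenants.
sales = [Row("Contoso", 10.0), Row("Fabrikam", 20.0), Row("Contoso", 5.0)]
visible = apply_filter(sales, "Contoso")
```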

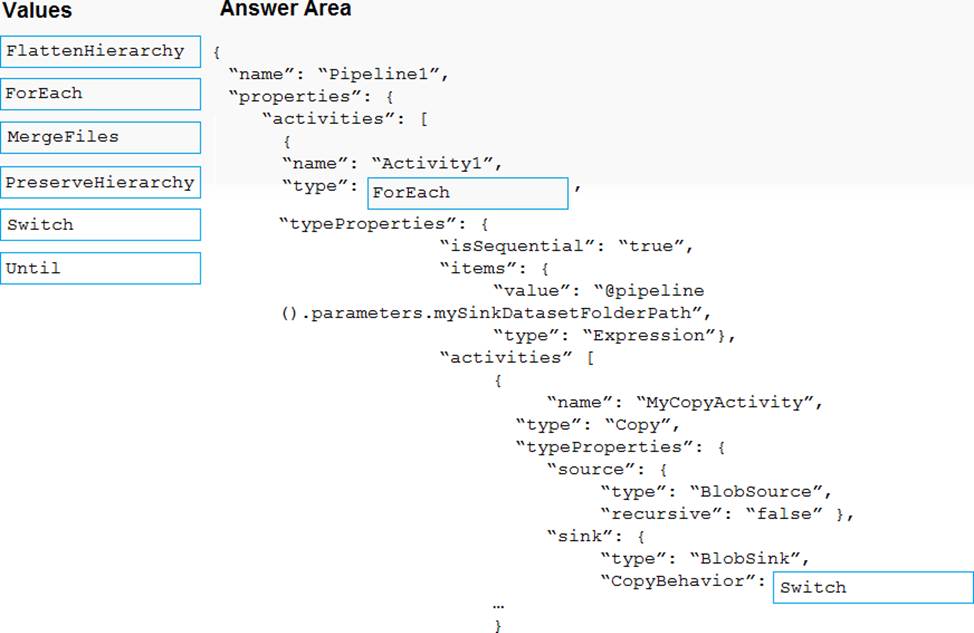

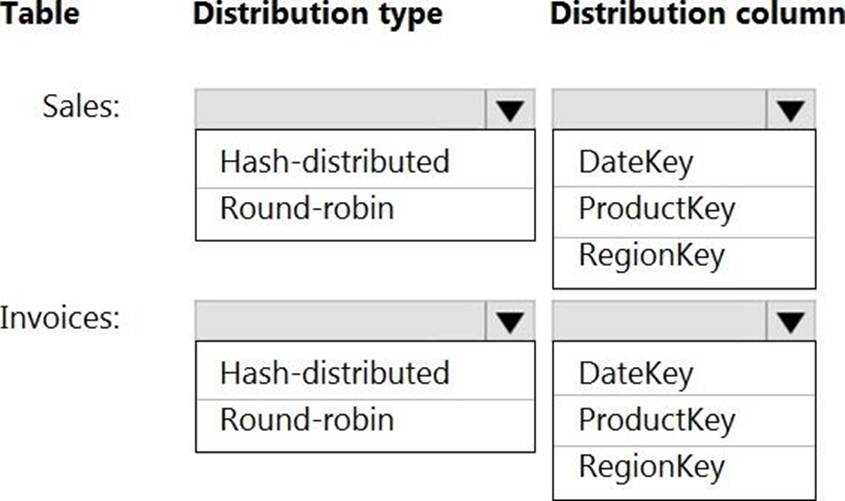

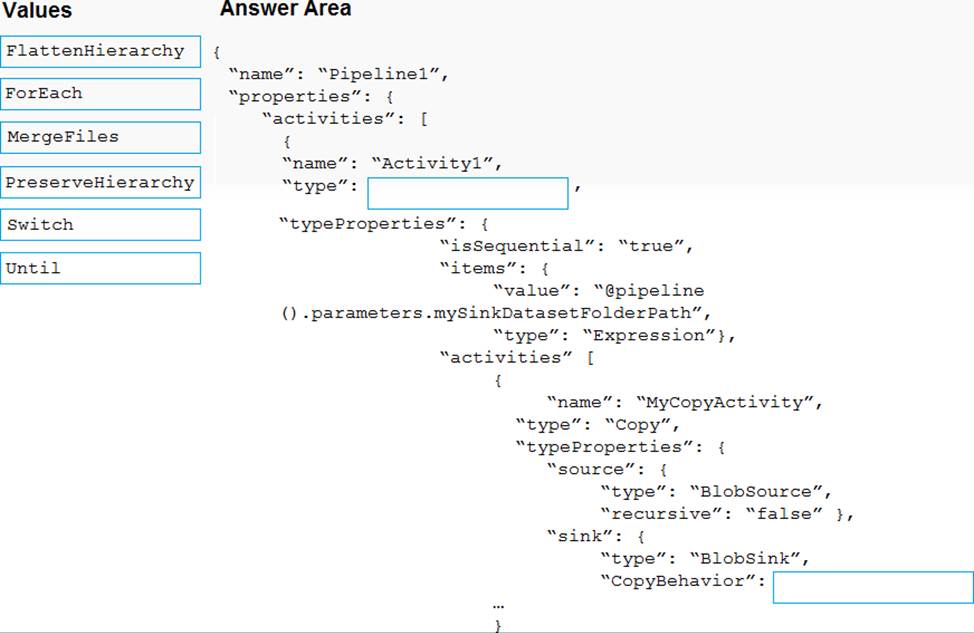

You have an Azure subscription that contains an Azure data factory.

You are editing an Azure Data Factory activity JSON.

The script needs to copy a file from Azure Blob Storage to multiple destinations. The solution must ensure that the source and destination files have consistent folder paths.

How should you complete the script? To answer, drag the appropriate values to the correct targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

HOTSPOT

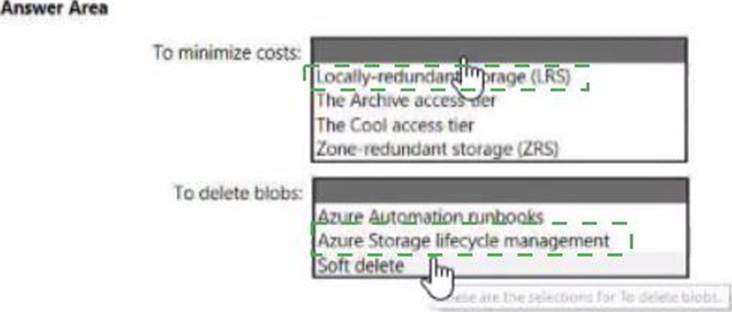

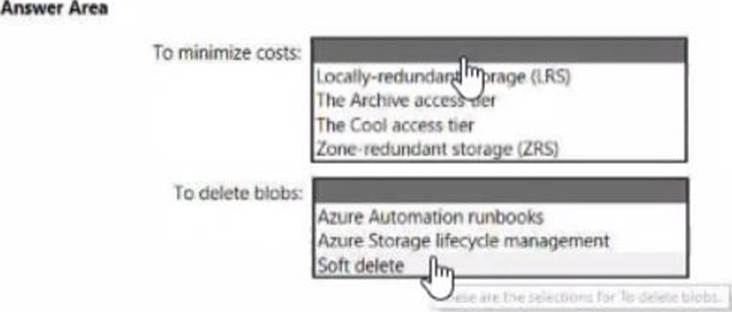

You have an Azure subscription.

You need to deploy an Azure Data Lake Storage Gen2 Premium account.

The solution must meet the following requirements:

• Blobs that are older than 365 days must be deleted.

• Administrator efforts must be minimized.

• Costs must be minimized.

What should you use? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.
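One way to express an age-based deletion requirement is a blob lifecycle management rule. The sketch below builds such a rule as a Python dictionary and serializes it to JSON; the rule name is hypothetical, and basing the rule on last-modified time rather than creation time is an assumption.

```python
import json

# Lifecycle management policy expressed as a Python dict.
policy = {
    "rules": [
        {
            "enabled": True,
            "name": "delete-after-365-days",  # hypothetical rule name
            "type": "Lifecycle",
            "definition": {
                "filters": {"blobTypes": ["blockBlob"]},
                "actions": {
                    "baseBlob": {
                        # Assumption: age is measured from the last modification.
                        "delete": {"daysAfterModificationGreaterThan": 365}
                    }
                },
            },
        }
    ]
}

policy_json = json.dumps(policy, indent=2)
```

Once such a rule is in place the storage service applies it on its own schedule, so no custom cleanup job has to be maintained.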

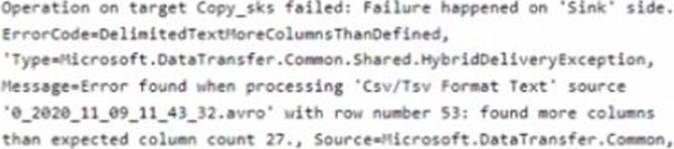

You have an Azure Data Lake Storage Gen2 account that contains two folders named Folder1 and Folder2.

You use Azure Data Factory to copy multiple files from Folder1 to Folder2.

You receive the following error.

What should you do to resolve the error?

- A . Add an explicit mapping.

- B . Enable fault tolerance to skip incompatible rows.

- C . Lower the degree of copy parallelism.

- D . Change the Copy activity setting to Binary Copy.

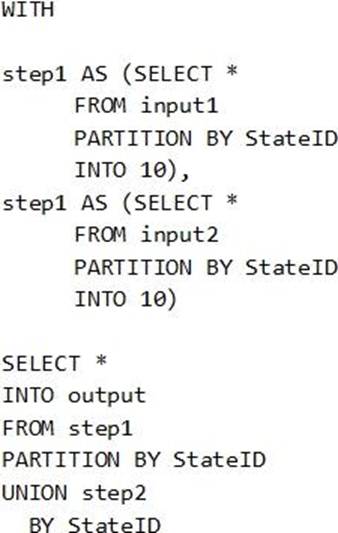

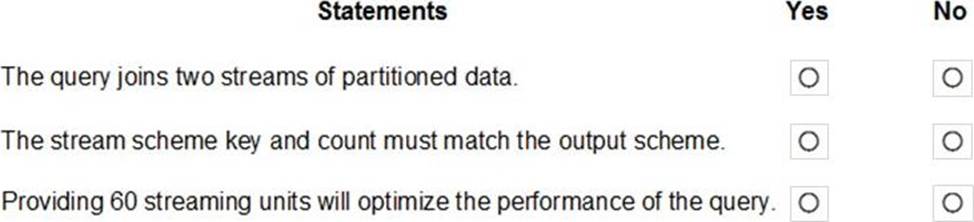

HOTSPOT

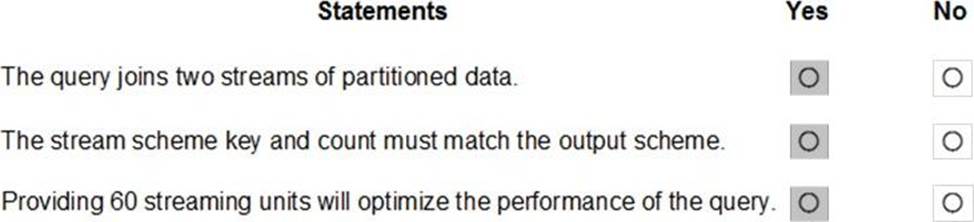

You have the following Azure Stream Analytics query.

For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

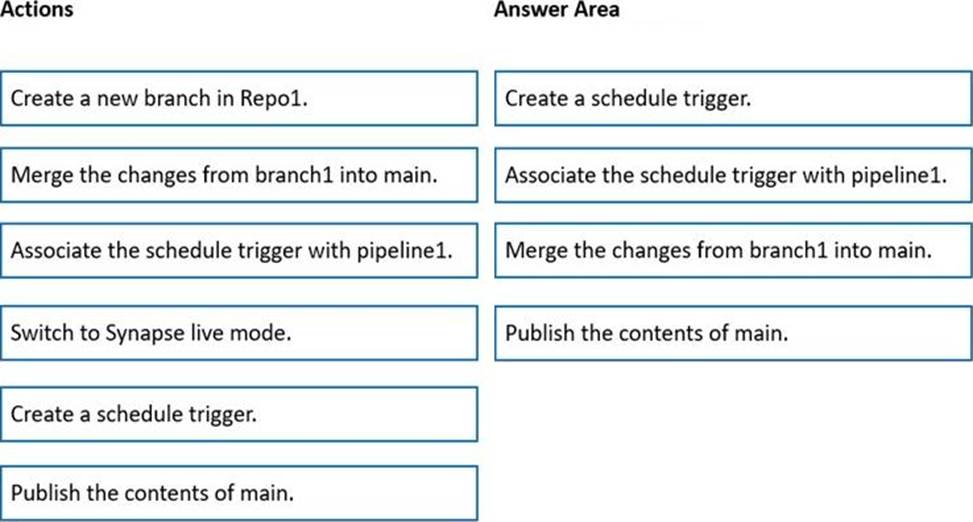

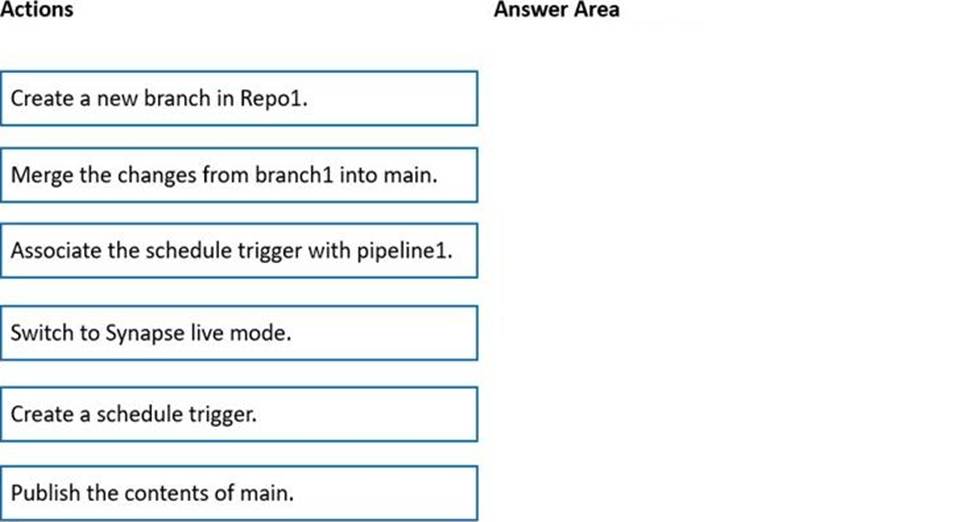

DRAG DROP

You have an Azure subscription that contains an Azure Synapse Analytics workspace named workspace1. Workspace1 connects to an Azure DevOps repository named repo1. Repo1 contains a collaboration branch named main and a development branch named branch1. Branch1 contains an Azure Synapse pipeline named pipeline1.

In workspace1, you complete testing of pipeline1.

You need to schedule pipeline1 to run daily at 6 AM.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order. NOTE: More than one order of answer choices is correct. You will receive credit for any of the correct orders you select.
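For orientation, a daily 6 AM schedule is typically expressed as a schedule trigger definition attached to the pipeline. The sketch below builds such a definition as a Python dictionary; the trigger name, start time, and time zone are assumptions, and only `pipeline1` comes from the scenario.

```python
import json

trigger = {
    "name": "DailyAt6AM",  # hypothetical trigger name
    "properties": {
        "type": "ScheduleTrigger",
        "typeProperties": {
            "recurrence": {
                "frequency": "Day",
                "interval": 1,
                "startTime": "2025-01-01T06:00:00Z",  # assumed start date
                "timeZone": "UTC",  # assumed time zone
                "schedule": {"hours": [6], "minutes": [0]},
            }
        },
        "pipelines": [
            {
                "pipelineReference": {
                    "referenceName": "pipeline1",
                    "type": "PipelineReference",
                }
            }
        ],
    },
}

trigger_json = json.dumps(trigger, indent=2)
```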

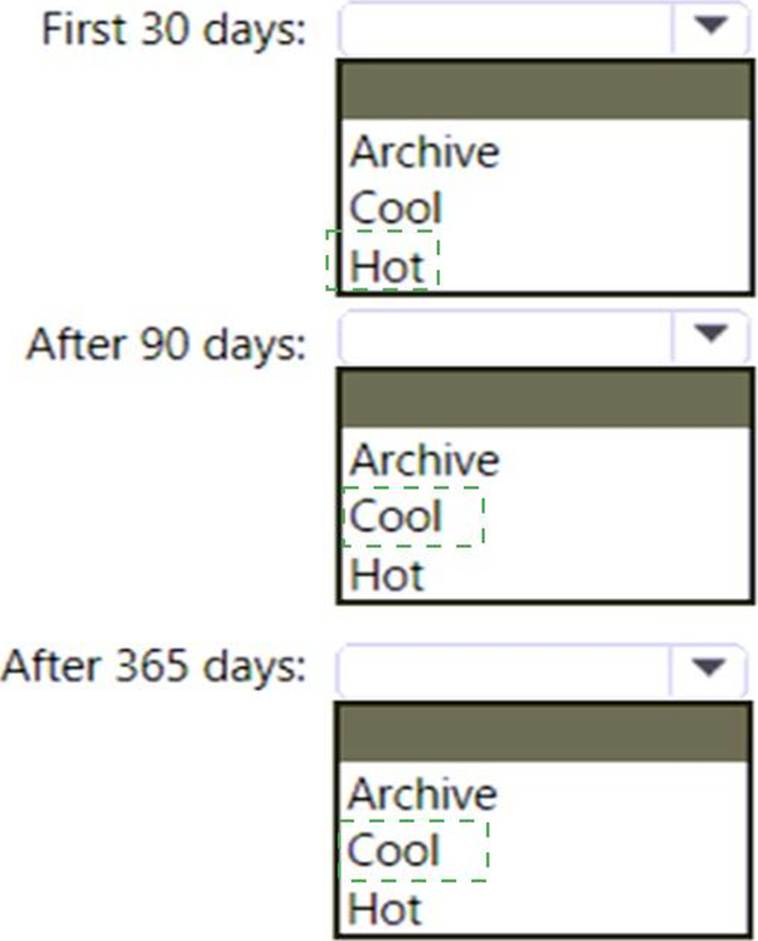

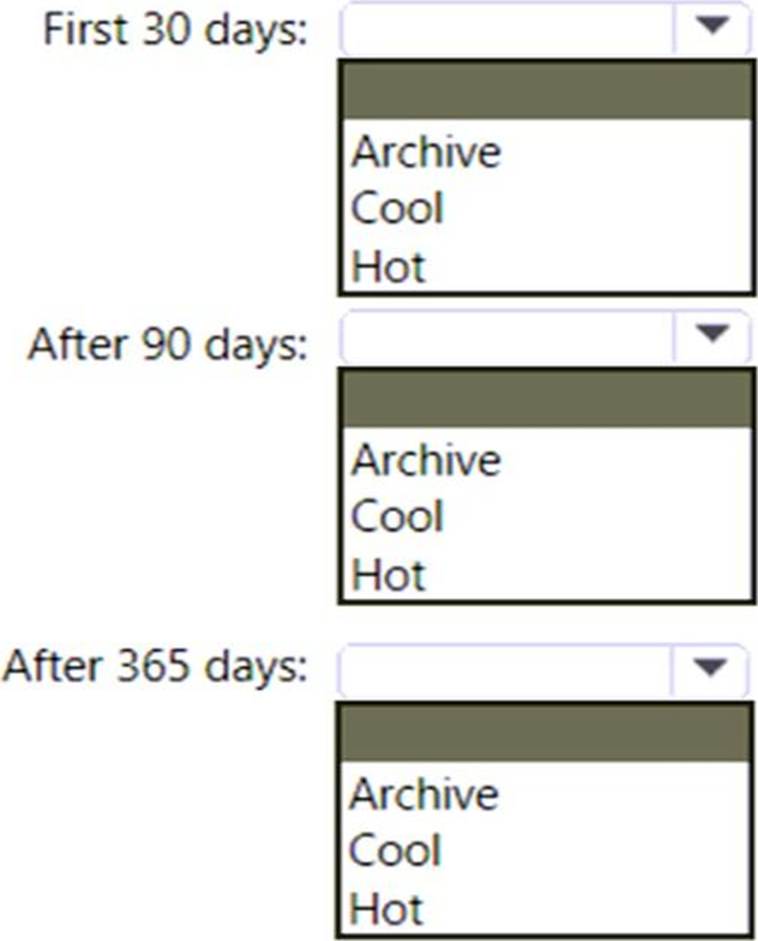

HOTSPOT

You are designing an application that will use an Azure Data Lake Storage Gen2 account to store petabytes of license plate photos from toll booths. The account will use zone-redundant storage (ZRS).

You identify the following usage patterns:

• The data will be accessed several times a day during the first 30 days after the data is created. The data must meet an availability SLA of 99.9%.

• After 90 days, the data will be accessed infrequently but must be available within 30 seconds.

• After 365 days, the data will be accessed infrequently but must be available within five minutes.

HOTSPOT

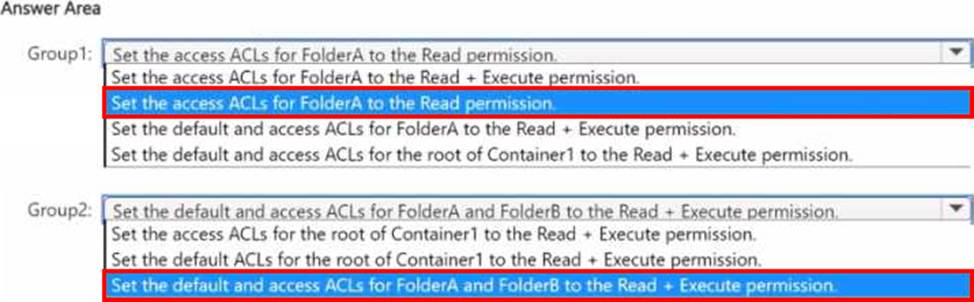

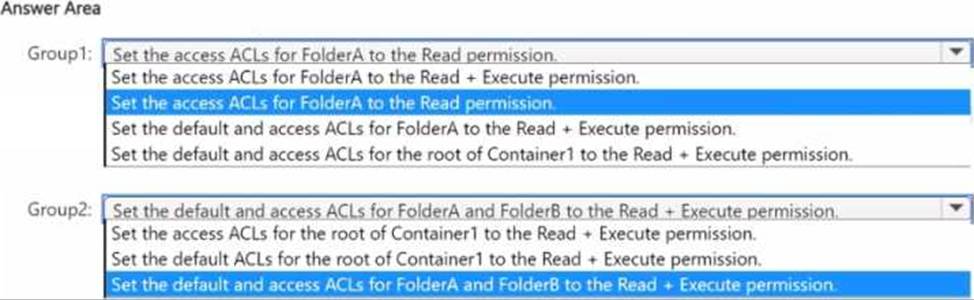

You have an Azure Data Lake Storage Gen2 account named account1 that contains a container named Container1. Container1 contains two folders named FolderA and FolderB.

You need to configure access control lists (ACLs) to meet the following requirements:

• Group1 must be able to list and read the contents and subfolders of FolderA.

• Group2 must be able to list and read the contents of FolderA and FolderB.

• Group2 must be prevented from reading any other folders at the root of Container1.

How should you configure the ACL permissions for each group? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.
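Data Lake Storage Gen2 ACLs follow a POSIX-like model: listing a folder needs Read and Execute on that folder plus Execute on every folder above it. The sketch below models only that rule in Python. The ACL entries shown are hypothetical sample values for a single group, not the answer to the question, and the helper names are made up for illustration.

```python
def has(perms: str, needed: str) -> bool:
    """True if every letter in `needed` (e.g. 'rx') appears in `perms` (e.g. 'r-x')."""
    return all(flag in perms for flag in needed)


def can_list(folder: str, acl: dict) -> bool:
    """Listing needs Execute on every ancestor and Read + Execute on the folder."""
    parts = folder.strip("/").split("/")
    ancestors = ["/"] + ["/" + "/".join(parts[:i]) for i in range(1, len(parts))]
    traversable = all(has(acl.get(a, "---"), "x") for a in ancestors)
    return traversable and has(acl.get(folder, "---"), "rx")


# Hypothetical ACL entries for one group; missing folders default to no access.
group_acl = {"/": "--x", "/FolderA": "r-x"}

can_list_a = can_list("/FolderA", group_acl)
can_list_b = can_list("/FolderB", group_acl)
can_list_root = can_list("/", group_acl)
```

With these sample entries the group can traverse the container root and list FolderA, but it cannot list the root itself or FolderB.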

HOTSPOT

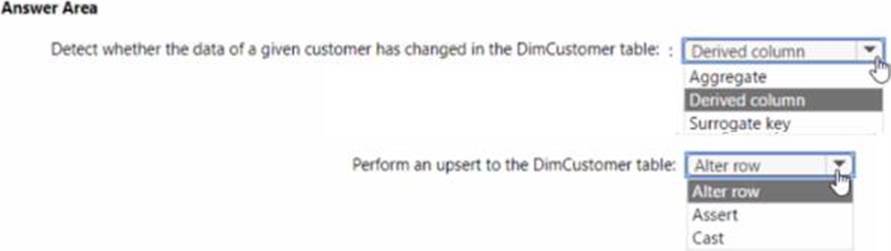

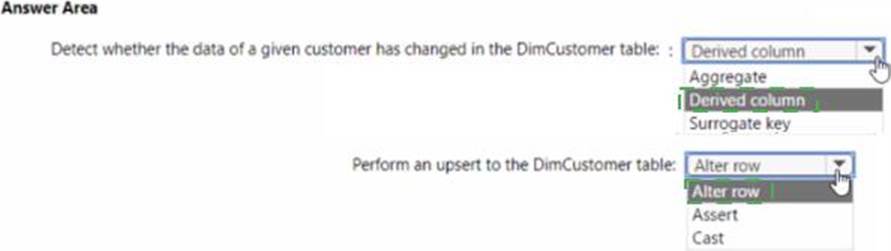

You are developing an Azure Synapse Analytics pipeline that will include a mapping data flow named Dataflow1. Dataflow1 will read customer data from an external source and use a Type 1 slowly changing dimension (SCD) when loading the data into a table named DimCustomer in an Azure Synapse Analytics dedicated SQL pool.

You need to ensure that Dataflow1 can perform the following tasks:

• Detect whether the data of a given customer has changed in the DimCustomer table.

• Perform an upsert to the DimCustomer table.

Which type of transformation should you use for each task? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.
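The two tasks can be pictured outside of a mapping data flow as plain logic: compare a hash of the tracked attributes to detect a change, then overwrite or insert the row, which is what Type 1 means (no history is kept). The sketch below is a minimal Python model under that reading. The column names and sample customers are hypothetical, and it does not represent the data flow transformations themselves.

```python
import hashlib


def row_hash(row: dict, columns) -> str:
    """Hash the tracked attribute values so two rows can be compared cheaply."""
    joined = "|".join(str(row[c]) for c in columns)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def upsert_type1(dimension: dict, incoming: list, key: str, columns) -> dict:
    """Overwrite changed rows and insert new ones; return counts per outcome."""
    stats = {"inserted": 0, "updated": 0, "unchanged": 0}
    for row in incoming:
        existing = dimension.get(row[key])
        if existing is None:
            stats["inserted"] += 1
        elif row_hash(existing, columns) != row_hash(row, columns):
            stats["updated"] += 1
        else:
            stats["unchanged"] += 1
            continue  # nothing to write for an identical row
        dimension[row[key]] = dict(row)
    return stats


# Hypothetical dimension contents and incoming batch.
dim_customer = {1: {"CustomerId": 1, "Name": "Ann", "City": "Oslo"}}
incoming = [
    {"CustomerId": 1, "Name": "Ann", "City": "Bergen"},
    {"CustomerId": 2, "Name": "Bo", "City": "Lund"},
]
stats = upsert_type1(dim_customer, incoming, "CustomerId", ["Name", "City"])
```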